As you probably know if you’ve been following the discussions regarding the best way to deal with the pandemic, there has been a fierce debate about whether allowing the epidemic to run its course until we’ve reached “herd immunity” and it starts receding was the right thing to do. I’m increasingly annoyed by how epidemiologists and experts in related fields have contributed to this debate, so I wanted to make a few observations in response to some of the things some of them have said. As those of you who follow me on Twitter know, I’m working on a very detailed piece about the narrative that China engaged in a massive cover-up of the outbreak in Wuhan for weeks or even months, which to be clear I think is total bullshit, so I don’t have a lot of time for this post and therefore it’s going to be quick and dirty, but I still needed to get it off my chest.

In particular, although I increasingly think lifting the stay-at-home orders as soon as possible and letting the epidemic run its course is the only realistic option for most and perhaps even all countries, I’m not going to defend that view in this post. (To be clear, I wasn’t in favor of this strategy 2 months ago, because at the time I thought we had alternatives that were preferable, but we didn’t do what needed to be done then and now I think that ship has already sailed in most countries.) It’s also fine with me if you don’t agree with that view; I think it’s something reasonable people can disagree about. But that’s precisely why it annoys me when people try to pretend it’s not and suggest that anyone who supports this strategy just doesn’t understand the science, especially when they’re experts a lot of people listen to, because this is bullshit and, whether or not they realize that, they sure as hell ought to realize it. Of course, it’s fine if they are personally opposed to this strategy and want to argue that it would be a mistake, but this is a political opinion and not a scientific one and they have a responsibility to make that clear.

Carl T. Bergstrom and Natalie Dean, whom you probably know if you’re following the debates about COVID-19 on Twitter, recently published an op-ed in the New York Times against the “herd immunity” strategy that illustrates the kind of problems I’m talking about. (By the way, although I’m going to criticize them, this doesn’t mean I think they have nothing interesting to say. On the contrary, I have learned a lot by reading stuff they’ve written since the beginning of the pandemic, but it doesn’t mean everything they say is right. Indeed, what I’m talking about in this post doesn’t even exhaust my concerns about their piece, but like I said I’m not trying to defend the “herd immunity” strategy here, so that’s a story for another time.) In that op-ed, they explain the concept of “herd immunity” and, in particular, clarify that the epidemic wouldn’t stop immediately after “herd immunity” had been reached, but that people would continue to get infected for a while after that.

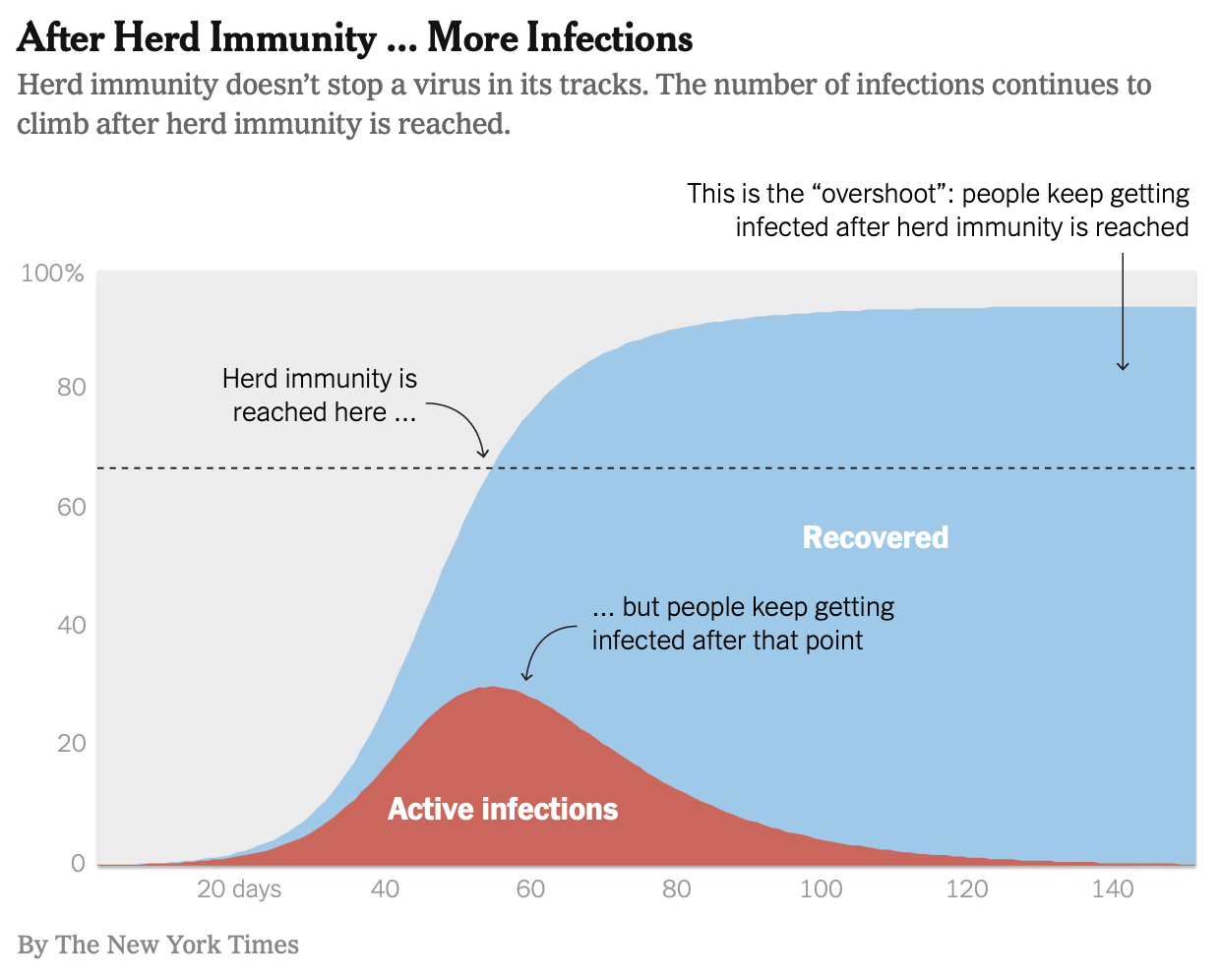

They illustrate their discussion with the chart above.

As you can see on this chart, “herd immunity” is just the point where enough people have been infected and have developed immunity against the virus that the epidemic starts receding, because the virus has a harder time finding hosts it can infect, but people continue to get infected for a while after that.
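To make the “overshoot” idea concrete, here is a minimal sketch of the kind of naive, homogeneous SIR model such a chart is typically based on. This is my own illustration, not the code behind the op-ed’s chart: the function name `simulate_sir`, the 5-day infectious period, the initial infected fraction and the Euler time step are all assumptions chosen for illustration, and the R of 2.7 is simply the value that comes up later in this post.

```python
def simulate_sir(r0, infectious_days=5.0, i0=1e-4, dt=0.01, max_days=1000.0):
    """Naive SIR model (homogeneous mixing, no mitigation), Euler integration.

    Returns (herd immunity threshold,
             cumulative fraction infected when the epidemic peaks,
             cumulative fraction infected when the epidemic has died out).
    All parameter defaults are illustrative assumptions.
    """
    gamma = 1.0 / infectious_days      # recovery rate per day
    beta = r0 * gamma                  # transmission rate per day
    s, i = 1.0 - i0, i0                # susceptible and infectious fractions
    threshold = 1.0 - 1.0 / r0         # naive herd immunity threshold
    infected_at_peak = None
    prev_i = i
    t = 0.0
    while t < max_days and i > 1e-9:
        new_infections = beta * s * i * dt
        new_recoveries = gamma * i * dt
        s -= new_infections
        i += new_infections - new_recoveries
        # The first time prevalence falls, the epidemic has started receding.
        if infected_at_peak is None and i < prev_i:
            infected_at_peak = 1.0 - s
        prev_i = i
        t += dt
    return threshold, infected_at_peak, 1.0 - s


threshold, at_peak, final = simulate_sir(2.7)
print(f"threshold: {threshold:.1%}, infected at peak: {at_peak:.1%}, final: {final:.1%}")
```

In this sketch the epidemic starts receding when cumulative infections cross the threshold 1 - 1/R, but infections keep accumulating well past that point, which is the overshoot. Note that every assumption criticized in this post (no mitigation, no behavior change, no heterogeneity) is baked into those few lines.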

The problem with this chart is that it’s based on a naive model that, among other things, assumes that nothing is done in order to mitigate the epidemic while trying to achieve “herd immunity” and that people’s behaviors are exactly what they used to be when the epidemic started and nobody knew anything about social distancing. But that’s obviously not what people who are in favor of using something like Sweden’s strategy, which is explicitly mentioned in the op-ed, want to do and it’s also not what’s going to happen when stay-at-home orders are lifted, so it’s extremely misleading to use that chart in a piece whose goal is precisely to argue against such a strategy. Another problem is that, as I had noted on Twitter even before that op-ed was published (not that I came up with this or was the only one to make that point), this kind of model also makes unrealistic assumptions about heterogeneity:

A lot of people claim that, given that even in NYC only ~20% of people seem to have been infected, we are still a long way from herd immunity, but I'm actually not sure this is correct for the kind of reasons outlined by this guy in a comment to @tylercowen's controversial post. pic.twitter.com/e2koLTSdr9

— Philippe Lemoine (@phl43) April 24, 2020

As I made clear in the tweet immediately after that one, not that many people paid attention to that, I’m obviously not saying that 10% is enough to reach “herd immunity”, that’s not the point. The point is that you can’t assume that, if we tried to let the epidemic run its course in a controlled way (as Sweden is doing), something like 90% of people would end up being infected, because this estimate is based on a model that doesn’t take into account things like heterogeneity and network structure, which could make a significant difference.

Many people replied by saying that it’s obvious that, whatever effect heterogeneity might have, it can’t be practically significant, but it’s not obvious and they don’t actually know that. As I have kept stressing since I wrote about the Imperial College model, despite what people seem to think, intuition is totally useless on those issues. We have to rely on modeling, but as I also explained in my post about the Imperial College model, modeling the epidemic of COVID-19 poses very serious epistemological issues that aren’t going to go away just because epidemiologists assure me they know what they’re doing. Frankly, given what some of them have done since the beginning of this crisis, I think I can be forgiven for not assuming they know what they’re doing. (Lest you forget, the now infamous IHME model was put together by epidemiologists, not by random people on the Internet. To be fair, Bergstrom did criticize the IHME model early on, but he criticized it for being too optimistic, which I don’t think is really the issue with the model. Anyway, the question of why the IHME model did poorly is complicated, so let’s leave this discussion for another time.) Again, it’s hardly obvious what effect heterogeneity has on the threshold of infection required to reach “herd immunity”, so this question deserves to be addressed seriously.

This is why it’s been pretty disappointing that, every time I’ve brought up this issue, the only response I’ve gotten from epidemiologists and the people defending them has been this kind of knee-jerk reaction:

In which a comment by a pseudonymous CS startup guy on a blog about epidemiologists' GRE scores erases overrides decades of work in network epidemiology. https://t.co/wazfoTXENX

— Carl T. Bergstrom (@CT_Bergstrom) May 5, 2020

As I said in response to Bergstrom, everyone in this discussion has already agreed that the first sentence of that comment was dumb, so why doesn’t he address the substantive point instead of rehashing that? I have no doubt that epidemiologists are aware that heterogeneity could affect the estimate of “herd immunity”, but I also don’t care and neither should you. It doesn’t help anyone if this awareness doesn’t inform what they tell the public and/or decision-makers. So what I care about is that, when they talk to the public about policy, epidemiologists adequately take into account heterogeneity and the uncertainty that results from it, which for the most part they don’t.

To be fair to Bergstrom, in another thread he wrote in response to people bringing up this point about heterogeneity (after he wrote the op-ed in the New York Times), he did address it:

1. Yesterday, @nataliexdean and I published an OpEd in the @nytimes explaining the concept of overshoot: an epidemic doesn't magically stop when you reach herd immunity. https://t.co/DdqwNjP8a6

— Carl T. Bergstrom (@CT_Bergstrom) May 3, 2020

The problem is that what he says in that thread doesn’t even come close to addressing the criticism adequately in my opinion.

What Bergstrom explains in that thread is basically that a colleague of his created a model that does take into account heterogeneity, as well as a bunch of other factors that you’d want a more realistic model to include, but that based on that model he’d “found it remarkable how little the heterogeneity and network structure impact basic [epidemiological] dynamics”. (By the way, nothing I say here should be construed as a criticism of the guy who created that model, who on the contrary was exemplary by putting the code online and writing very thorough documentation, which is the only reason people have been able to criticize some aspects of the model.) First, that’s extremely vague: he doesn’t say what proportion of the population would be infected according to this model if we just let the epidemic run its course until “herd immunity” has been reached. (Presumably, depending on how the model is parametrized, there must be a whole range of estimates.) So I don’t see how anyone can conclude from such a vague claim that heterogeneity and network structure don’t have a meaningful effect on the estimate.

But perhaps more importantly, this is just one model. I didn’t have time to dig into it, but given the complexity of the phenomenon it’s trying to describe, and how much we have yet to learn about how SARS-CoV-2 is transmitted, I have zero doubt that, if I did, I would find plenty of questionable assumptions. To be clear, that is not to say that it’s necessarily a bad model, that’s not what I’m saying at all. It’s just that, as I explained in my post about the Imperial College model, this kind of exercise requires that you make a lot of non-obvious modeling choices and leaves a lot of degrees of freedom to the researcher. In fact, several people have already criticized the way in which heterogeneity was modeled in that model, so this is not a purely hypothetical concern:

Thanks for the replying, we need this conversation!!

A lot of cool ideas in your model. Great work.

But I don’t think you model heterogeneity in the two relevant ways (more connected=more transmission, and younger=less risk).

Even simple models show heterogeneity matters a lot pic.twitter.com/5zvdOJsE77

— Wes Pegden (@WesPegden) May 3, 2020

Pegden has been raising those concerns for a while now and has made very similar criticisms of the New York Times op-ed, but as far as I can tell, Bergstrom and Dean haven’t responded to him yet.

By the way, when Pegden says that he found that heterogeneity could matter a lot, he is not kidding:

Note that in our modeling with @ChikinaLab, where the only heterogeneity modeled came from empirically measured differences by age group, we have scenarios at R=2.7 with an epidemic ending (after overshoot!) with only ~50% infected.

Are we making a conceptual error or are you?

— Wes Pegden (@WesPegden) May 1, 2020

Note that 50% is what he found with his model after “overshoot” and with an R of 2.7! Now, you may think that 50% instead of 90% doesn’t make any practical difference, but this is hardly obvious. In any case, this is a political question, not a scientific one.

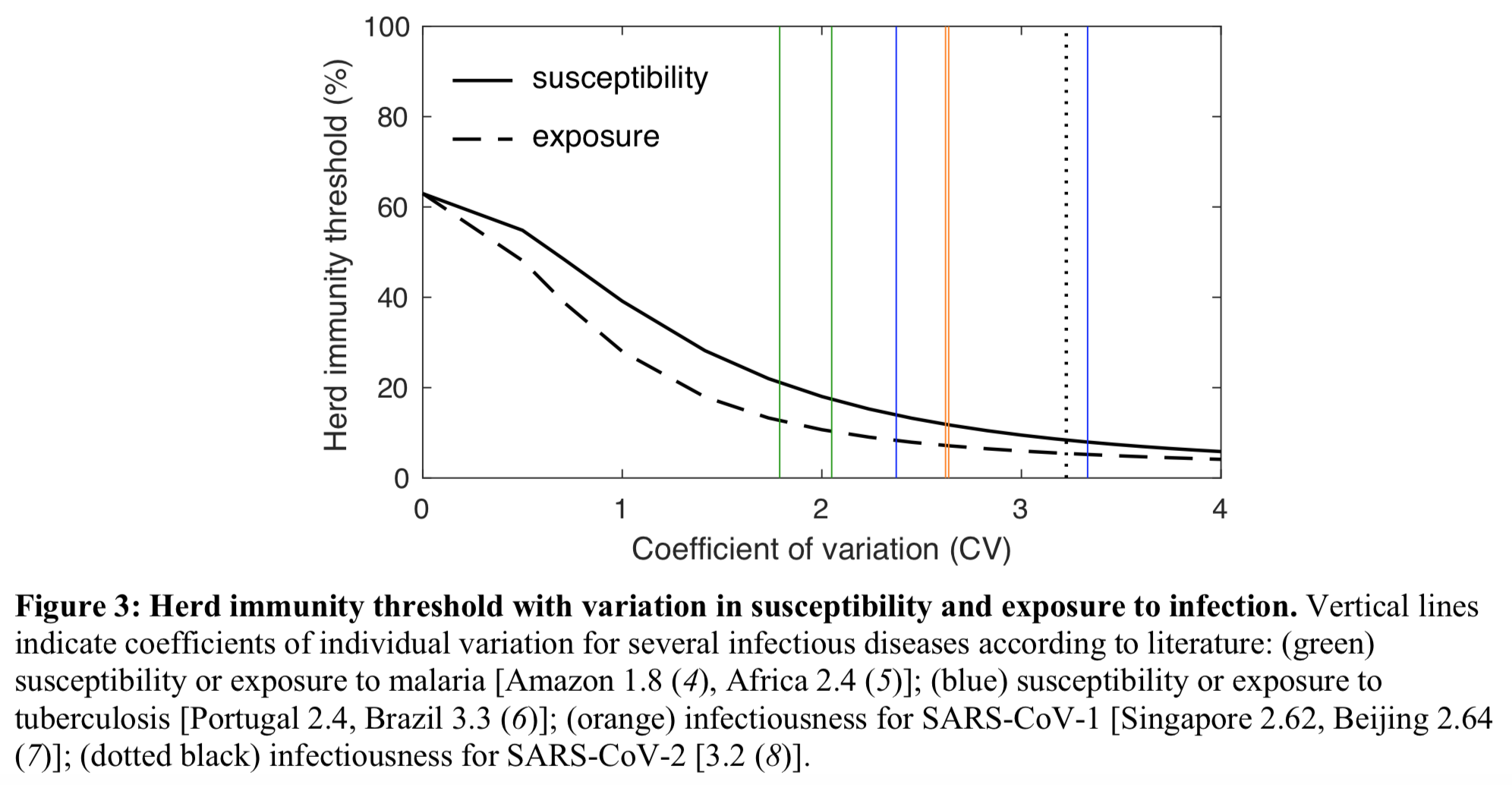

Another team of epidemiologists has just published a paper in which they use a model that takes into account the heterogeneity of susceptibility to infection, and they also conclude that heterogeneity in that sense could make a huge difference (see the chart above).

As you can see, according to their model, depending on how much heterogeneity of susceptibility to infection there is, the threshold for “herd immunity” could be as low as 20% or even less than that. You’d still have to account for “overshoot”, but that’s obviously very different from the picture that Bergstrom and Dean painted in their op-ed and what the former implied on Twitter in response to people who complained that he didn’t take into account heterogeneity.

To be clear, I’m not saying this model is any good (I have no idea), I’m just saying that Bergstrom dismissed the concerns about heterogeneity way too quickly. Relying on just one model to say that heterogeneity doesn’t really make any meaningful difference is not how you do “science”, that’s how you do bad science, which is not the same thing. If epidemiologists are going to opine on policy, which to be clear I hope they do because they’re still the people who know the most about this, their advice should obviously not rely on any particular model. This is not what people in the climate modeling community, who know a lot about the interface between modeling and policy, have been doing. Obviously, I’m not expecting epidemiologists to put together something like the IPCC report in just a few months, which takes years and a lot of resources. However, I am expecting them not to misrepresent how much uncertainty there is about “herd immunity”, which unfortunately I think many of them have done.

It’s okay if Bergstrom and Dean personally oppose the “herd immunity” strategy and want to make a case against it. They are scientists, but they’re also citizens, who are entitled to defend their views. But since their credentials mean that many people are going to take very seriously anything they say on the issue, they have a responsibility not only to accurately represent the state of knowledge in their field and in particular how much uncertainty there is, but also to clearly distinguish between scientific claims they make because it’s the consensus in their field, scientific claims they make because, although they’re disputed in their field, they find them compelling, and finally claims that are not scientific but political. When Dean says that pursuing the “herd immunity” strategy is “insane”, she is not making a scientific claim, she is making a political statement. Again, she is entitled to this political opinion, but she should make clear that this is what it is, because otherwise a lot of people won’t understand that and will assume that it’s what “science” says.

In fact, even if we knew for a fact that pursuing Sweden’s strategy would result in something like 90% of people getting infected (which we don’t), the question of whether we should pursue such a strategy would still be political. It’s important that scientists understand their proper role, which is not to substitute themselves for normal democratic deliberation, but to give citizens and decision-makers the tools they need to make informed decisions. To be clear, I’m not accusing Bergstrom and Dean of deliberately trying to present their political opinions as guided only by scientific knowledge, nor am I saying that what I’m asking of them is always easy. However, as experts in the field who are followed by a lot of people on social media and who have access to prestigious outlets such as the New York Times to air their opinion, it’s their responsibility to do it. Since the beginning of this crisis, both of them have been invaluable resources for non-experts like me who want to learn more about this stuff, but on the topic of “herd immunity” I think they have failed to fulfill this responsibility.

ADDENDUM: Someone on Twitter replied that, other things being equal, it was better to use simpler models. Given that simple models that assume a randomly mixing homogeneous population have worked well enough with previous respiratory viruses, he went on to argue, we should also use them for this pandemic. The problem is that, as far as I can tell, the naive model didn’t work well with previous respiratory viruses or at least we don’t have any good reasons to think it did. According to this paper, for the pandemic of swine influenza in 2009, R was estimated to be 1.46. This puts herd immunity at ~31% according to the naive model (1 - 1/R), which means that once you account for overshoot, a significantly higher proportion of the population should have been infected according to this model. Yet the CDC estimates that, between April 2009 and March 2010, only ~19% of the population was infected in the US. So I’d say that, in this case, it doesn’t look like the naive model did very well. It actually looks like it did pretty terribly. And keep in mind that, back in 2009, there was essentially no mitigation, certainly nothing even remotely comparable to what people in favor of the “herd immunity” strategy propose. Now, it’s true that the CDC estimates of the prevalence of infection for the swine influenza in 2009 are not based on a random serological survey, but on a model that uses data on flu-related hospitalizations, so I don’t know how much we can trust them. But even if you say that we should totally dismiss them, this just leaves you with the conclusion that we have no idea how well the naive model did in 2009, which isn’t going to help you if you’re trying to defend the approach consisting in using the naive model to guide policy.
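For readers who want to check the arithmetic in this addendum, here is a small sketch of what the naive model predicts for R = 1.46. The threshold is the standard 1 - 1/R formula; for the post-overshoot total I use the textbook final-size relation of the homogeneous SIR model, z = 1 - exp(-R·z), solved by fixed-point iteration. The function names and the solver are my own illustrative choices, and the final-size relation assumes a fully susceptible, randomly mixing population with no mitigation, which is exactly the assumption in dispute.

```python
import math


def herd_immunity_threshold(r0):
    """Naive herd immunity threshold for a homogeneous population."""
    return 1.0 - 1.0 / r0


def final_size(r0, tol=1e-12, max_iter=10_000):
    """Final attack rate of the naive SIR model, i.e. the non-zero
    solution of z = 1 - exp(-r0 * z), found by fixed-point iteration."""
    z = 0.5  # start away from the trivial solution z = 0
    for _ in range(max_iter):
        z_next = 1.0 - math.exp(-r0 * z)
        if abs(z_next - z) < tol:
            return z_next
        z = z_next
    return z


r = 1.46  # estimate for the 2009 swine flu pandemic cited above
print(f"threshold: {herd_immunity_threshold(r):.1%}")
print(f"final size after overshoot: {final_size(r):.1%}")
```

Under those assumptions the naive model puts the threshold at about 31% and the final attack rate at roughly 55%, which is the gap with the CDC’s ~19% estimate that this addendum is pointing at.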

ANOTHER ADDENDUM: I was looking for information on the Hong Kong flu pandemic in 1968 and I stumbled on this paper, which reviews the evidence on the attack rate and tries to estimate the basic reproduction number during this pandemic. I haven’t had time to read this carefully, but it seems kind of tricky to find out how well the naive model did in that case, because there were 2 waves and, for whatever reason, it looks as though the basic reproduction number increased when the second wave hit. But the most important point is that, from what I gather (again I just skimmed the paper), estimates of the basic reproduction number are derived from estimates of the final size of the epidemic or its growth rate by a model that doesn’t account for heterogeneity, so if heterogeneity matters a lot the estimates of the basic reproduction number are going to be completely off. More generally, since you need an epidemiological model to estimate the basic reproduction number, it’s going to be tricky to use the method I used in the previous addendum for the pandemic of swine influenza in 2009 to test how well the naive model, or indeed any epidemiological model, did in the past, even setting aside the fact that we often don’t have very good data. I suspect that, if only for these kinds of reasons, we don’t really know how well the naive model or any other model did with previous pandemics. Your conclusion about that is going to depend on how you model things, so I doubt this will help settle the debate about heterogeneity one way or the other, but admittedly I’m just thinking out loud here, so perhaps this is wrong.

YET ANOTHER ADDENDUM: Someone in the comments pointed out that, in the US, a vaccine started to be administered on a massive scale as early as November 2009, which must obviously have slowed down the diffusion of the virus by reducing the proportion of the population that is susceptible, so I can’t really compare the CDC estimate of how many people ended up being infected with the prediction of the naive model when you assume that everyone is susceptible except people who have already been infected. As I say in my reply, it doesn’t really change anything because the really important point is what I explain in my second addendum, but I still wanted to add a note about it since he’s right that it’s relevant to what I say in the first addendum. (By the way, there are non-parametric methods to estimate the basic reproduction number, but I’m pretty sure they would raise similar epistemological issues.) To be honest, I probably shouldn’t have said anything about testing epidemiological models, because this is a complicated question that deserves a separate discussion and I would have to think more about it.

ONE LAST ADDENDUM: Well, well, well…

While this definitely matters in principle (think STDs) I’ve been skeptical that this is going to make a big difference in the current situation, given realistic contact networks and so forth. @mlipsitch, what do you think we’re missing in this thread?https://t.co/lSPzn2X9ZX https://t.co/sb2DaEiR8j

— Carl T. Bergstrom (@CT_Bergstrom) May 8, 2020

Thank you for this article! You express very clearly what I “felt” about this subject without quite managing to put it into words, since I’m a complete novice in the field. I have sometimes had the feeling that epidemiologists, who are somewhat enjoying their hour of glory through this crisis, refused to take into account the opinions and remarks of scientists from other disciplines, brushing the remarks aside without really explaining why they didn’t find them relevant, or even worse ignoring them entirely even though they seem to make sense.

As you also say, I have learned a lot over the past few weeks by reading their work, and I have enormous respect for their expertise, but I have sometimes had the impression that they were reacting exactly like the doctors and emergency physicians to whom people were explaining what exponential growth was at the very beginning of the crisis in Europe.

The heterogeneity discussion does not necessarily make the overshoot phenomenon irrelevant. Actually, I would argue the opposite in some cases. Assume that the infection propagation is driven by a small group of high-degree individuals very well-connected together, and well connected to the global population (just a toy model to prove my limited point). This makes the herd immunity rate small (once enough of those guys are recovered, which will happen fast, the infection cannot spread). But the overshoot effect will be at the scale of the population as a whole. The still sick high-degree guys will keep infecting their low-degree neighbors until they recover.

My main issue with the Op-Ed is the dismissal of the controlled burn, which is the only feasible course of action for European countries, whether we consciously adopt it, or default to it because lockdowns are over soon, and our attempts at controlling will definitely not work. It will mostly be driven by changes in human behavior, and independent of government policy.

Anyway, the overshoot effect is not an issue in such a setting.

My pet peeve right now is the magical belief that the second wave will be for some reason worse than the first. You can find it among both the lockdown fetishists, as an argument to keep us locked indefinitely, and the lockdown-averse, to blame politicians who put lockdowns in place.

Yeah, I never understood what the rationale was behind “the second wave will be worse!”. Virus mutation like the Spanish flu?

Do you actually think that swine flu was halted by herd immunity? Aren’t there other factors which make it a poor comparison with the current epidemic?

You mean like a vaccine that they started using in the USA in that timeframe? They administered 46 million doses by Nov 2009, which is square in the middle of the timeframe being discussed here and it’s odd to leave out any mention of the vaccine (which was supposedly quite effective overall). I don’t know enough to know what that 19% might have been without the vaccine, of course, but it’s weird to not discuss it.

I didn’t know a vaccine had been developed and administered to so many people so quickly, but now that I do, I admit that it’s a good point. The CDC also gives estimates of how many people had been infected at various points between April and November 2009, so in principle we could still check how well the naive model did for that period, but it wouldn’t really show anything one way or the other for the kind of reasons I mention in my second addendum. Still, I’m going to add another addendum about this, since again you are right that it’s a relevant fact.

Actually, there’s a difference between a vaccine for a new virus and one for a new influenza. Swine flu is a new sub-species of influenza, so the protocol for developing its vaccine is the same as the one we use for other influenzas every year, while a vaccine for a new coronavirus is a totally different story.

Yes, I know that, but it’s irrelevant to the point geofflangdale was making, which is that since in 2009 a vaccine started to be administered massively as early as November, you wouldn’t expect as many people to end up getting infected even if you used the naive model.

Re: your addendum 2

In the most naive SIR model, the R_0 can be computed very simply from the initial exponential growth rate r and the average contagiousness time D: R_0 = 1 + rD. No need to look at the final attack rate. Bonus: you can observe this early on in the epidemic.

Actually, one could (imo should), independently of the model, define R_0 with this formula.

Then instead of assuming a specific model one can make the rather weak assumption that the final attack rate is a function of this R_0 constant. Observing past pandemics gives us this function.

Assuming that the heterogeneity phenomenon is constant from one pandemic to the other, you have a prediction.

My bet is on a 30% attack rate without mitigation.

Doesn’t herd immunity work best for a herd that’s fenced off from the rest of the world? Isn’t that what’s implied by using the word “herd” rather than “population?”

How is that going to work for a big city? Say NYC gets to the 60% level or whatever is promised to be herd immunity and then opens up again for travelers. Maybe community spread will die out in NYC but if infected tourists and business travelers from parts of the world where the pandemic is still active keep showing up in NYC and coughing on New Yorkers who don’t have immunity, doesn’t that mean that virtually all New Yorkers, not just 60%, will eventually get it (assuming no vaccine, et al)? After all, Times Square advertises itself as The Crossroads of the World.

After herd immunity, an infected individual (local or tourist) infects on average less than one person, and so on. A coughing tourist will give rise to a small cluster that dies out quickly. No flaring up of the epidemic again.

Quick computation: if the average cluster size induced by a tourist is 2, you need a constant 3% of tourists being infectious to infect every New Yorker in the next year. But 3% of a country’s population being infectious at any given time is an absurd number. Divide by 10 maybe… Moreover, there will likely be fewer tourists than in the past, and not all New Yorkers are in contact with tourists.

At any rate, everything is very linear now. Halving the number of tourists will halve the number of tourist-caused cases, suppressing tourism will suppress them, etc. So this is compatible with slow political decision-making, unlike the exponential phase.

In the end, if very simple prevention techniques are applied (you can’t board a plane if you have a fever), I see this as a non-issue.

Much of the appeal of the Herd Immunity idea is that it implies that you don’t have to get the disease if you don’t want it. If only 60% get it, you can be part of the, say, 40% who never get it!

But what it sounds like is that in the long run, maybe 80 or 90% get it. So your odds of being one of those who never get it aren’t as good as you were told.

That could’ve been edited down to half its length. Not all of us have time for multi-thousand-word essays. Brevity is a virtue!

Thanks, this is very useful stuff. I’ve been blown away by the quality of your posts on virus-related topics.

I am also pretty close to the point of losing faith that any other strategy aside from “let it ride” is going to work out for Western liberal democracies. But a couple things have still kept me from adopting that point of view wholeheartedly. First, on the “unforeseen consequences” side, it isn’t known how long immunity lasts and the cases of apparent reinfection (although they’re probably just relapses) are very concerning.

Second and more importantly: Summer is coming fast, and we don’t know what effects that will have. It may well have the effect of driving down the rate of infection for several months, to the point where we (a) have time to finally build up testing capacity to the point where we can attempt the South Korea-style test-trace-isolate strategy and (b) have a manageable number of infected people to deal with starting in the fall. So I think I still favor measures to aggressively hold down the number of infections until we have some idea what the summer will bring, especially given how much economic damage is a sunk cost at this point.

Well, France, Spain, Germany, Italy, and NYC seem to be smashing it down. So not inclined to your pessimism re liberal democracies. Badly functioning hyper capitalist post Thatcherite nations perhaps (but NYC … the Dutch heritage?)

Thanks!

I don’t have time to reply in detail to the points you’re making, but here are a few thoughts anyway.

I’m not very moved by the concerns about immunity, because for various reasons my prior that most people who are infected by SARS-CoV-2 will develop effective and lasting immunity is pretty high (see this thread on Twitter for more on this issue), so I tend to dismiss reports of reinfections as statistical anomalies or problems with the tests.

I like your second argument better, because I do think it’s likely the weather will slow down the virus. However, for this argument to work, you also have to assume a minimal degree of competence on the part of the government and compliance on the part of the population and, while I could be wrong about that, I’m skeptical either of these conditions is realistic.

That being said, I agree that it’s hardly obvious what the best course of action is. There is so much uncertainty about so many relevant facts that I don’t see how anyone can be very confident that any strategy is correct. But this is precisely why I’m annoyed that so many people talk as if there was no room for rational disagreement about this issue.

Hi Philippe! I guess you might be interested in the Japanese strategy against the coronavirus. Japan is focused on the cluster strategy, which tries to prevent superspreader events and crush clusters. The theory was developed from the experience of the MERS outbreak in South Korea and has been validated by the early transmission data in Japan. It is based on the heterogeneity of micro transmission dynamics. So far the strategy seems to work and has helped to keep cases low in Japan without a mandatory lockdown or massive testing. Here are some papers about it.

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4769415/

https://www.medrxiv.org/content/10.1101/2020.02.28.20029272v1

Thanks, I’ve long been puzzled by what is going on in Japan, so I will read that with interest.